Chapter 5 Introduction to Bootstrapping

This Chapter supplements the material in the first part of Lecture 7.

In this chapter, we will learn the core concepts of bootstrapping. That is, creating synthetic sampling distributions through multiple resampling (with replacement) of a single sample.

The basic process is fairly simple, once you have your original sample, and has the following characteristics:

- A bootstrap sample has an equal probability of randomly drawing any of the original sample elements (data points).

- Each element can be selected more than once - because the sample is done with replacement.

- Each resampled data set (the new sample) is the same size as the original one.

First, I will demonstrate the basic principle.

Recall from the last chapter that there was a simulated data set of 498 people, with variables representing smoking, biking, and heart disease.

We treated this as the population and then sampled from it to demonstrate uncertainty at different sample sizes.

So, let’s take the sample of 50 that we drew from that ‘population’ of 498, and imagine that we got this sample by (for example) doing a survey of the population (of 498), and this is our data set for analysis.

First we will remind ourselves of the properties and distribution of our sample, the median, mean, and distribution:

## [1] 15.22415## [1] 14.31424

Ok, there we go. Remember, this sample is our ‘data set’ for analysis. Remember, it represents a sample of 50 cases from a population of 498.

We know the median and mean, but we have no real indication of the uncertainty in those estimates. What I mean is, without information about the population mean and median (which of course we would not have in a real-life sampling situation), we cannot know how close the sample median and mean are to the population mean and median.

In order to estimate that information, we will eventually use the bootstrap method. For now, we are just going to demonstrate the basic idea.

So, what we do now is draw another random sample of 50. However, we don’t draw it from the population, we instad draw the new sample of 50 from our existing sample of 50.

That sounds weird to many people, and at first they often say ‘but how can it be different from your original 50??’. The trick is that each time we draw a data point, we replace it back, so we are always drawing our sample from the full 50. This is called sampling with replacement.

In this way, the new sample can only contain values which were in the original sample, but can contain different frequencies of those values. Or in other words, each value can occur many different times, and that number of times may be different to the original sample. So the distribution of values in this new sample will be different to the original sample, and the statistics will therefore also be different.

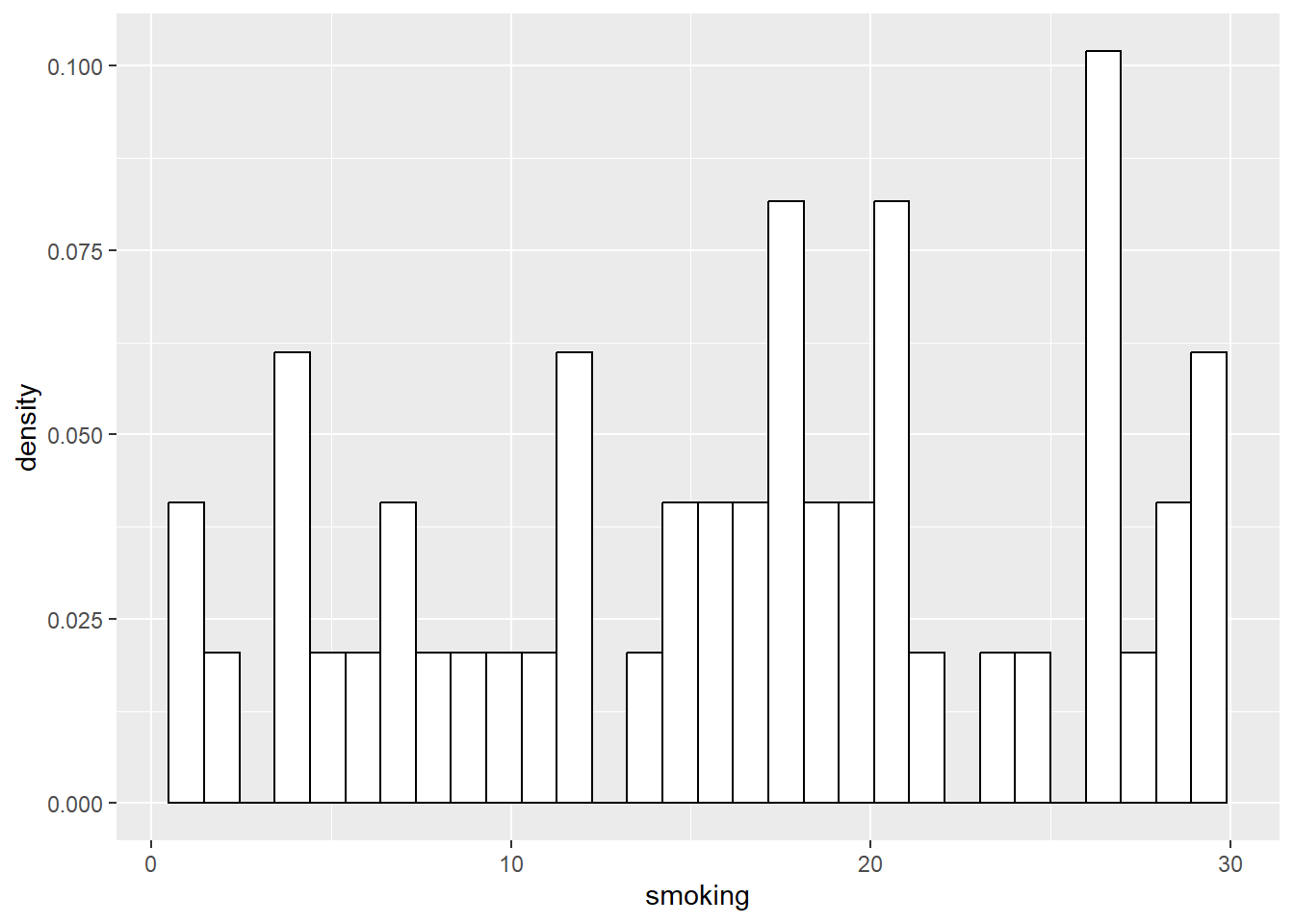

Let’s draw this new sample, take the median and mean of the sample, and plot it:

## # A tibble: 50 × 4

## ...1 biking smoking heart.disease

## <dbl> <dbl> <dbl> <dbl>

## 1 260 37.7 26.3 11.2

## 2 232 10.4 16.0 14.1

## 3 36 18.8 16.8 15.1

## 4 459 40.3 1.60 6.79

## 5 447 33.2 17.6 12.1

## 6 11 51.8 14.4 6.43

## 7 74 49.4 23.2 9.47

## 8 329 15.1 3.28 12.7

## 9 288 45.5 25.8 10.9

## 10 260 37.7 26.3 11.2

## # ℹ 40 more rows## [1] 16.01317## [1] 14.77138

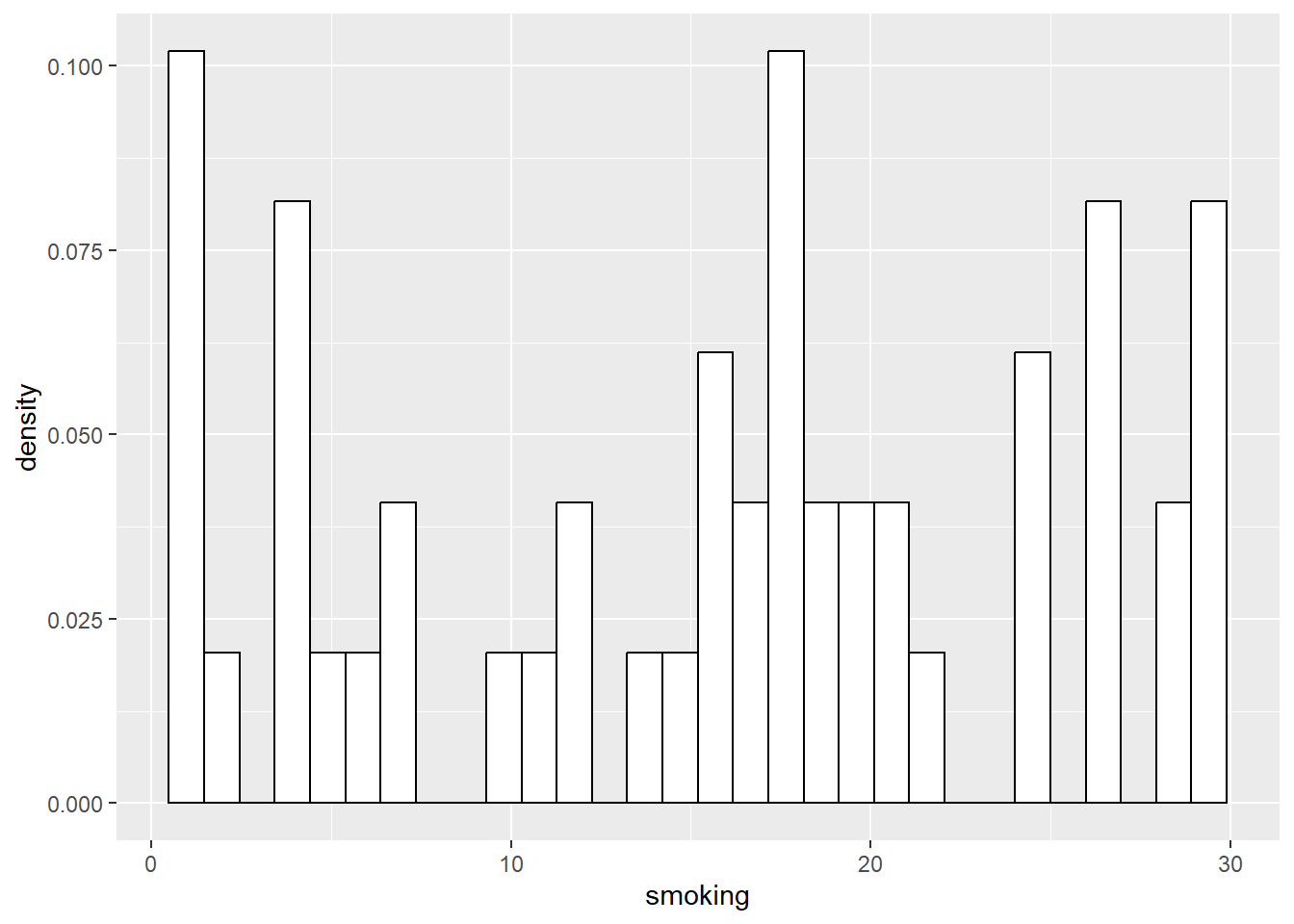

Marvelous! Now, for the purpose of example, let’s draw two more of these resamples from the original 50, take their median and mean, and plot the distributions…

## # A tibble: 50 × 4

## ...1 biking smoking heart.disease

## <dbl> <dbl> <dbl> <dbl>

## 1 260 37.7 26.3 11.2

## 2 92 26.9 16.7 13.5

## 3 459 40.3 1.60 6.79

## 4 107 49.8 3.57 6.26

## 5 36 18.8 16.8 15.1

## 6 274 55.4 17.2 7.23

## 7 165 35.3 9.15 9.75

## 8 385 31.7 28.7 13.0

## 9 92 26.9 16.7 13.5

## 10 385 31.7 28.7 13.0

## # ℹ 40 more rows## [1] 14.43512## [1] 13.57516

## # A tibble: 50 × 4

## ...1 biking smoking heart.disease

## <dbl> <dbl> <dbl> <dbl>

## 1 447 33.2 17.6 12.1

## 2 17 61.7 16.8 5.44

## 3 52 41.7 13.1 9.09

## 4 178 31.2 5.57 9.86

## 5 92 26.9 16.7 13.5

## 6 153 55.8 2.25 4.50

## 7 74 49.4 23.2 9.47

## 8 431 64.0 29.6 8.57

## 9 349 68.0 13.3 4.07

## 10 183 41.2 4.15 7.59

## # ℹ 40 more rows## [1] 13.92667## [1] 14.7087

Now, if we return to the slides, we can build a table using these mean and median values. Of course, the slide deck will have slightly different values, since it’s based on a different run of the resampling process, but the principle is the same.

So, this is the basic idea of bootstrapping. We sample with replacement from our original sample, many many times. We did 3 here manually, but we generally use a program to do this many more times, such as a thousand or more.

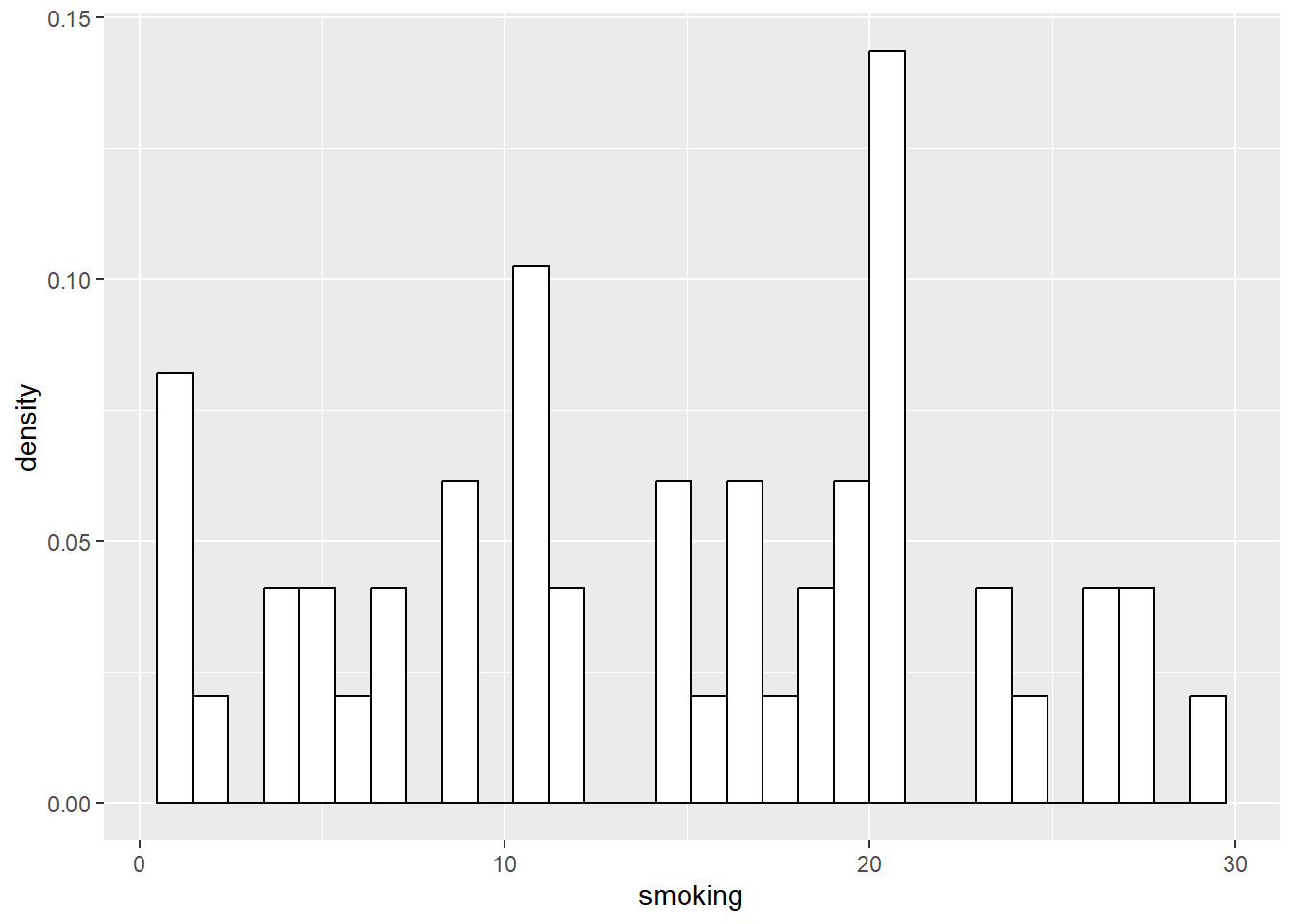

5.1 Bootstrapping in the Context of Previous Examples

To further reinforce the point, let’s now place ourselves in the position of three different researchers, each of varying levels of enthusiasm, and all three are researching the same population of 498 people that we have already explored in the last few examples.

Researcher 1 is a little like me as a Ph.D. student, and maybe more interested in ‘experiencing life’. So, he has little time to actually collect data, and not much more enthusiasm for it. In the end, he manages to take a sample of 10 people from the population of 498.

Researcher 2 is a bit more enthusiastic, and gets a sample of 50.

Researcher 3 is fairly conscientious, and takes a sample of 200 from the population of 498.

Now, what we can do, is run 1000 bootstrap replications of each of these varying-sized subsamples of the population, to see what might happen:

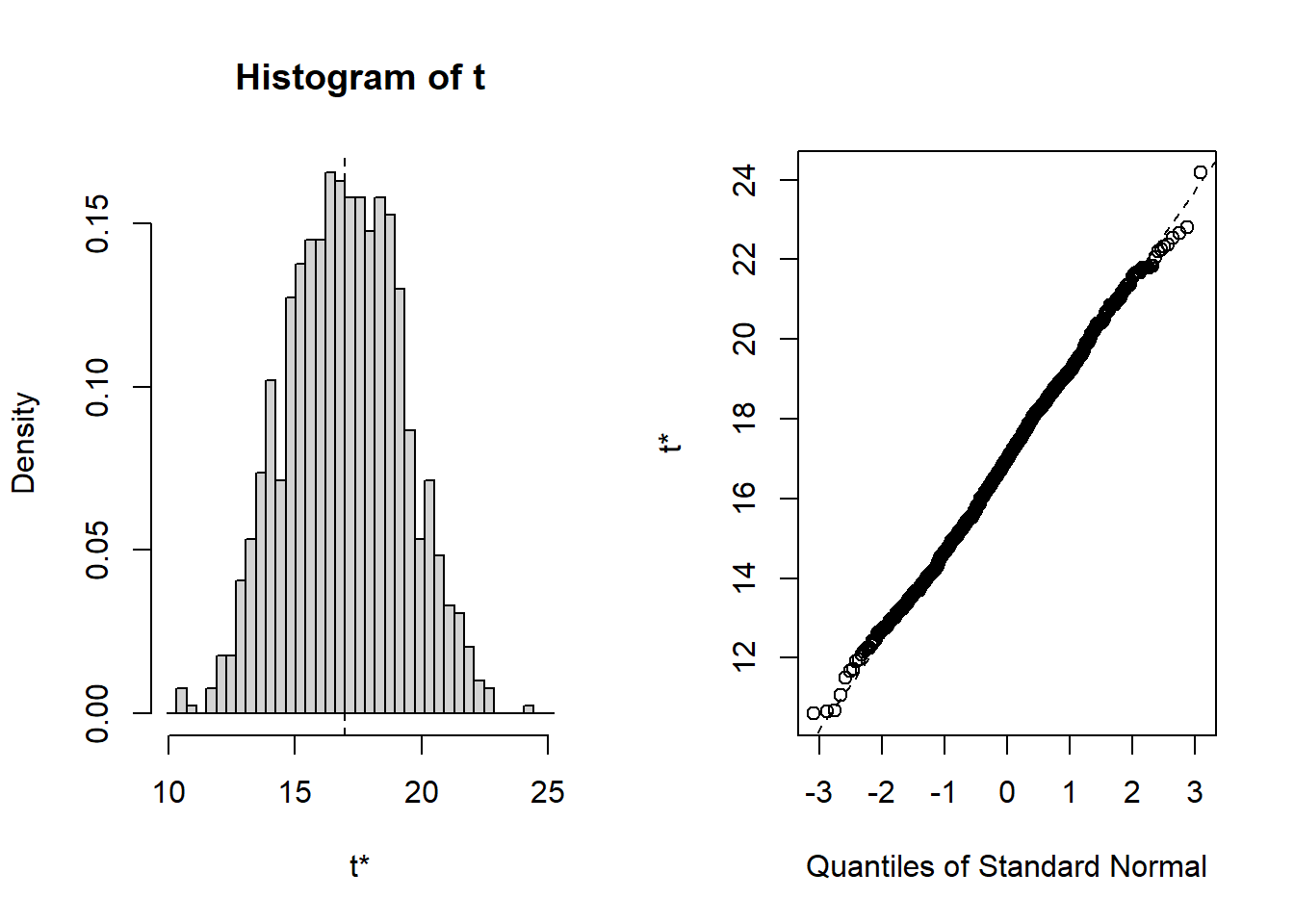

First, the 10:

##

## ORDINARY NONPARAMETRIC BOOTSTRAP

##

##

## Call:

## boot(data = sub.10, statistic = f1, R = 1000)

##

##

## Bootstrap Statistics :

## original bias std. error

## t1* 16.48825 0.2391972 3.054002

## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 1000 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = results, type = "norm")

##

## Intervals :

## Level Normal

## 95% (10.26, 22.23 )

## Calculations and Intervals on Original ScaleNow let’s do it for the other two subsamples of n=50, and n=200

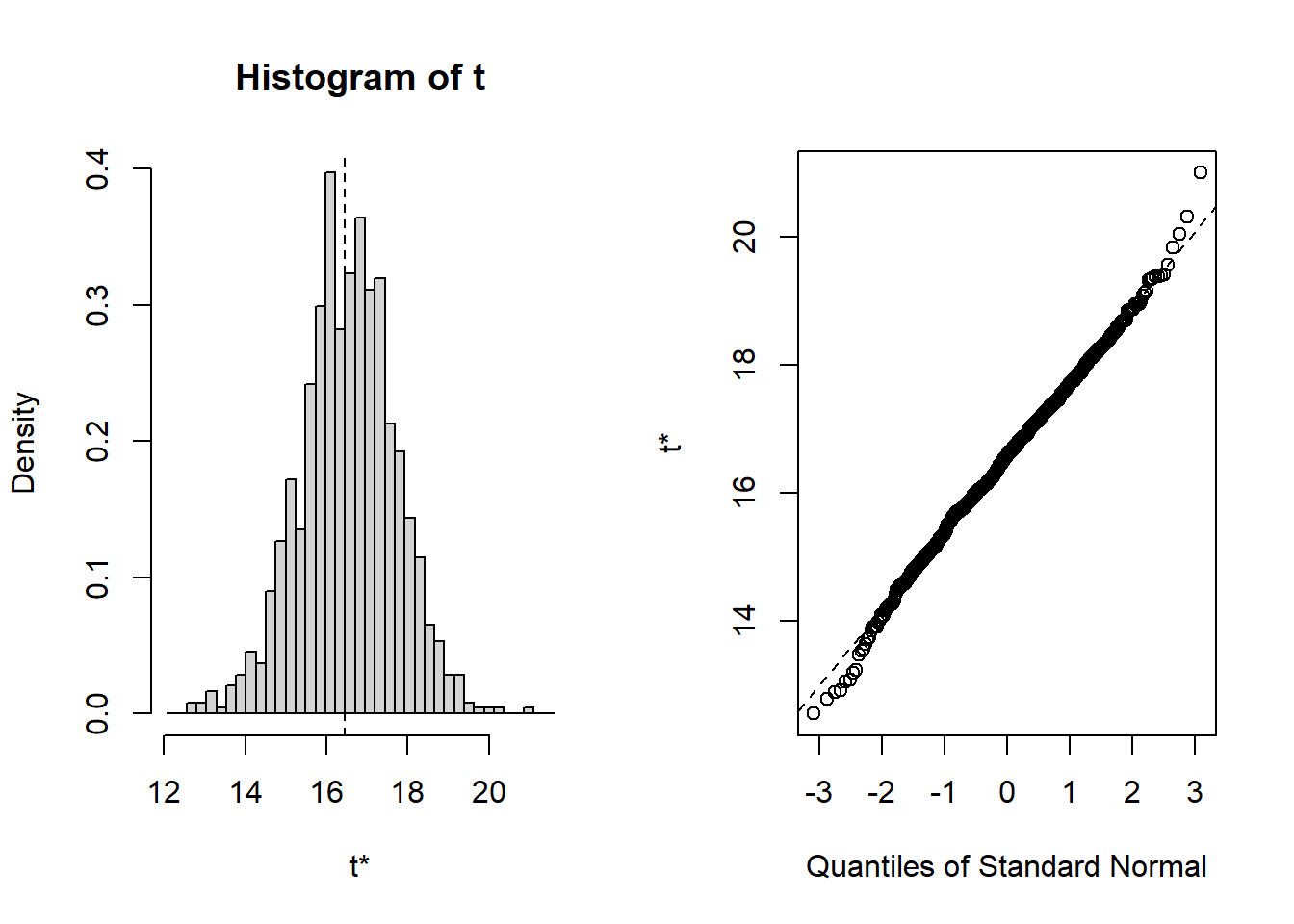

##

## ORDINARY NONPARAMETRIC BOOTSTRAP

##

##

## Call:

## boot(data = sub.50, statistic = f1, R = 1000)

##

##

## Bootstrap Statistics :

## original bias std. error

## t1* 14.31424 -0.006371521 1.042151

## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 1000 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = results, type = "norm")

##

## Intervals :

## Level Normal

## 95% (12.28, 16.36 )

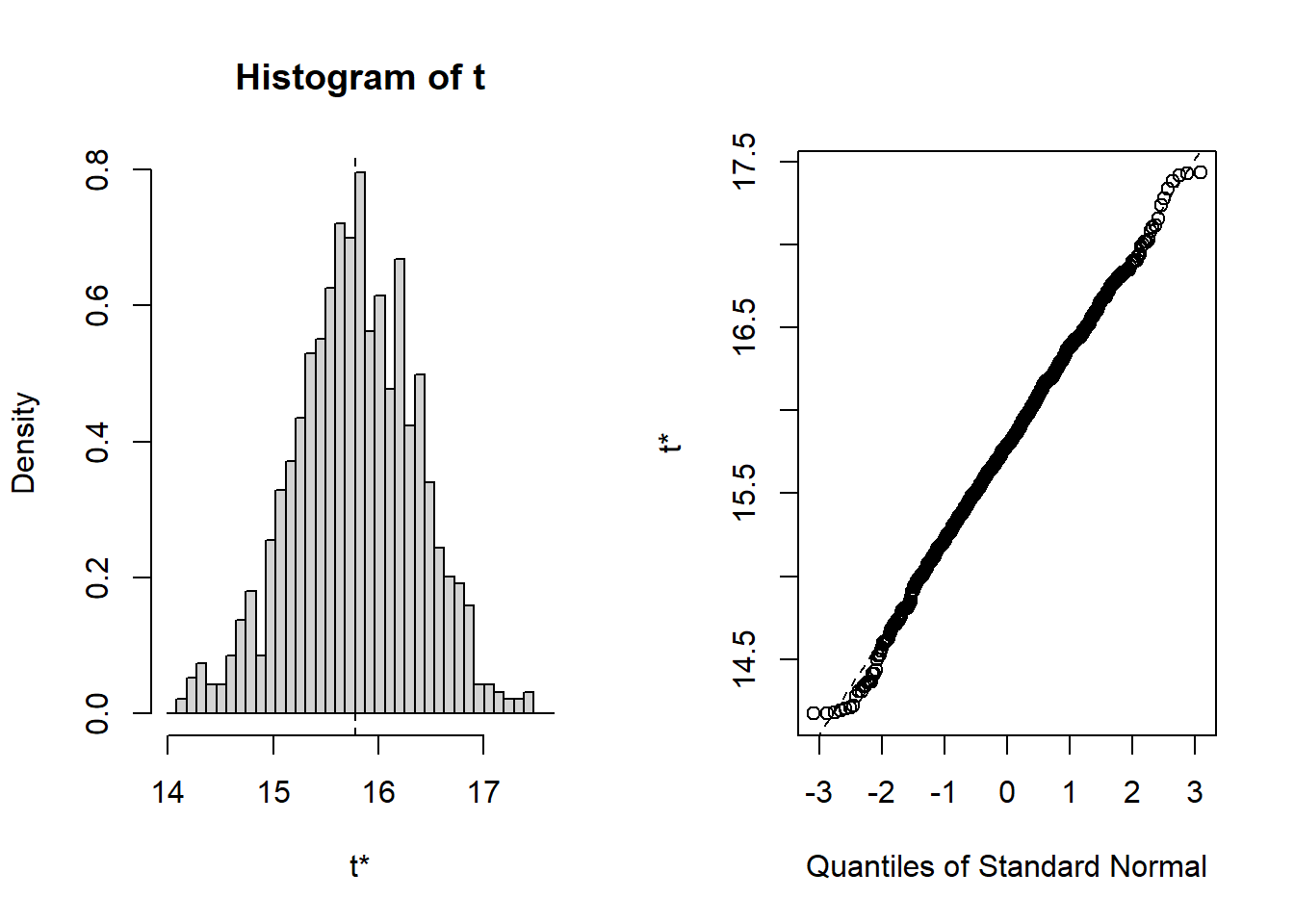

## Calculations and Intervals on Original Scale##

## ORDINARY NONPARAMETRIC BOOTSTRAP

##

##

## Call:

## boot(data = sub.200, statistic = f1, R = 1000)

##

##

## Bootstrap Statistics :

## original bias std. error

## t1* 15.19752 0.04032617 0.5847005

## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 1000 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = results, type = "norm")

##

## Intervals :

## Level Normal

## 95% (14.01, 16.30 )

## Calculations and Intervals on Original ScaleWe’ll now build a table with these values back in the slide deck. IAgain, remember the values in the slide deck will differ from these due to the randomness of the process.

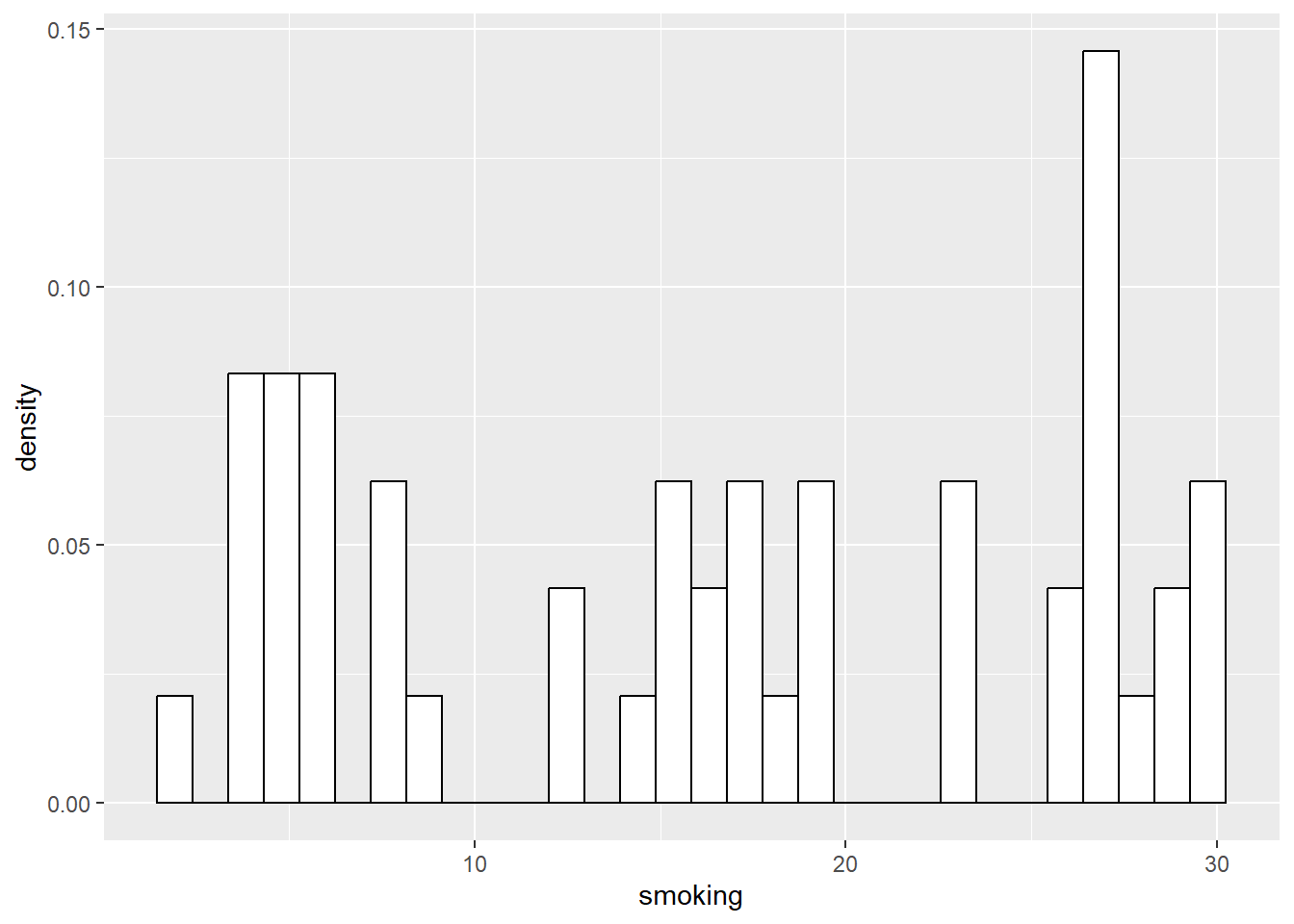

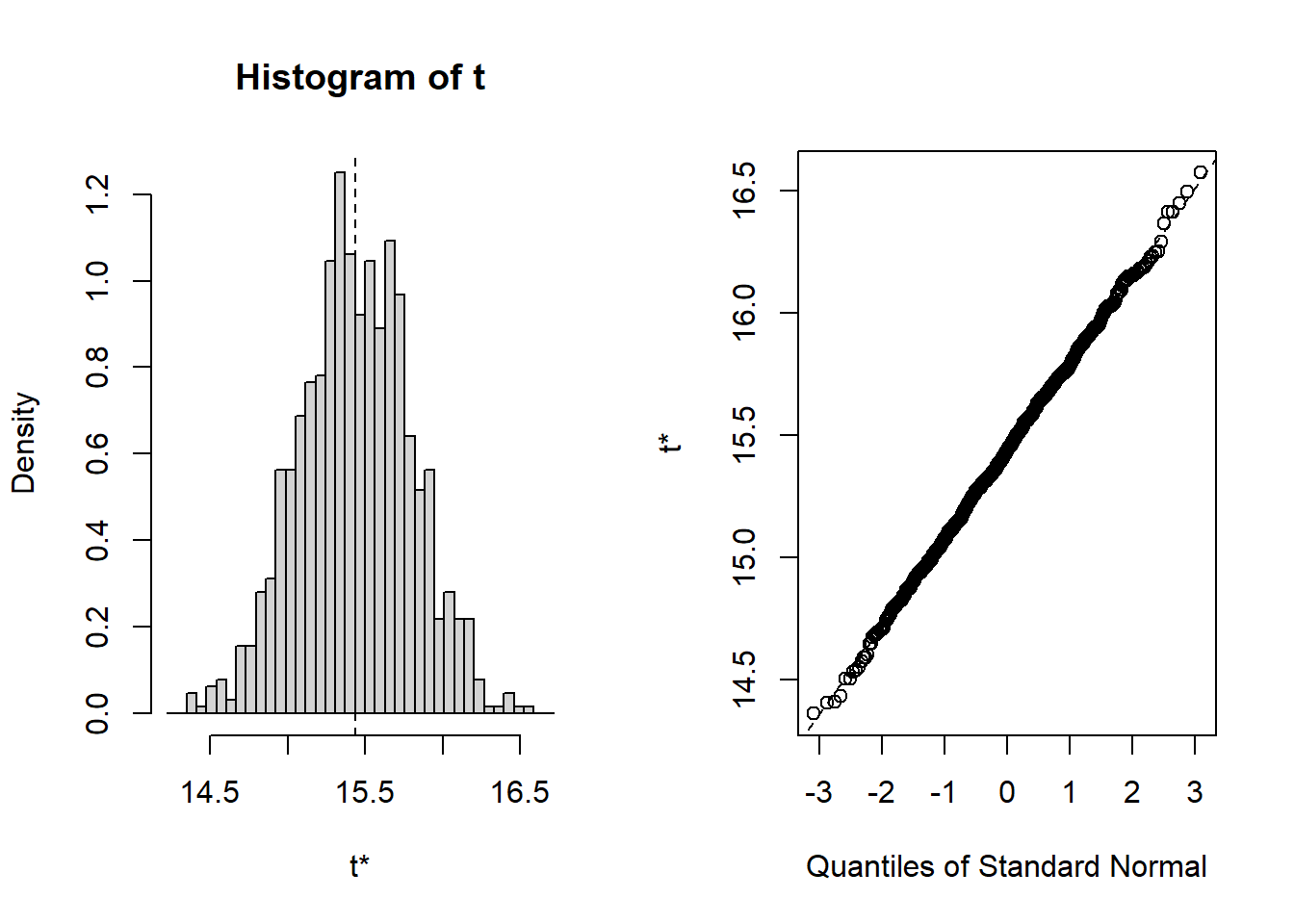

5.1.1 Bootstrapping the Original Sample…

Now, let’s shift our minds a bit, and consider that the data set of 498 actually represents a sample of a larger population (remember from the last chapter, it’s simulated, but meant to represent a sample from the population).

So, let’s bring in Researcher 4, the most conscientious of all. She is the one who manages to take a sample of 498 people from the population. And, finally, we can bootstrap the original full sample of 498:

##

## ORDINARY NONPARAMETRIC BOOTSTRAP

##

##

## Call:

## boot(data = Heart, statistic = f1, R = 1000)

##

##

## Bootstrap Statistics :

## original bias std. error

## t1* 15.43503 0.001437866 0.359051

## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 1000 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = results, type = "norm")

##

## Intervals :

## Level Normal

## 95% (14.73, 16.14 )

## Calculations and Intervals on Original ScaleThis is a very nice set of results, which can tell us many interesting things. So let’s go back to the slides…..

5.2 Bootstrapping Other Stuff…

We have so far only bootstrapped the mean. However, the basic principle can be applied to virtually any statistical estimate. So, we can revisit some of our prior analyses, and use the bootstrap method to quantify the uncertainty in the estimates that we previously accepted without really thinking too hard about them.

5.2.1 Correlations

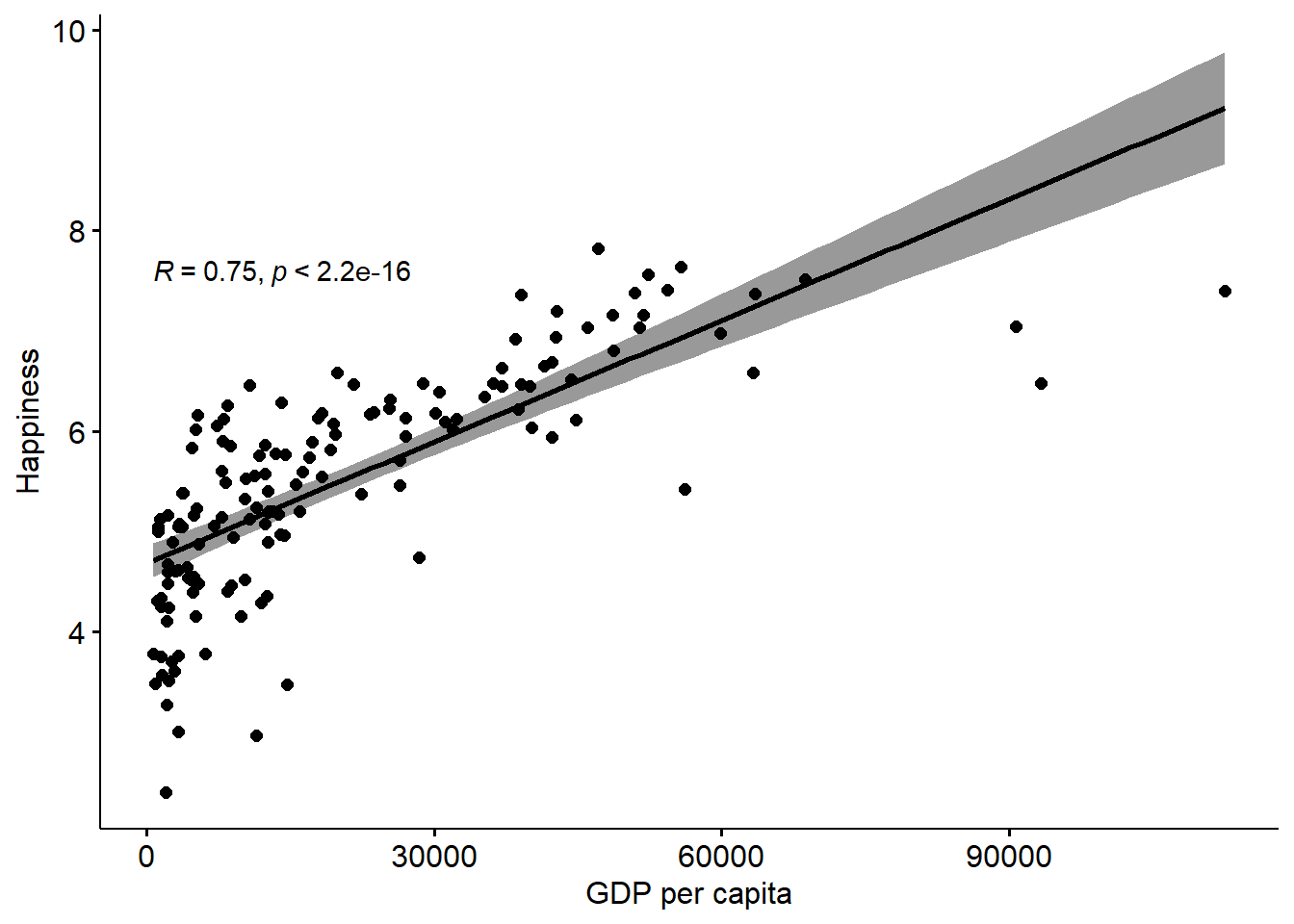

First, let’s revisit our recent correlation analysis of Happiness and GDP per capita.

## # A tibble: 6 × 4

## Country Happiness GDPpc Pop

## <chr> <dbl> <dbl> <dbl>

## 1 Afghanistan 2.4 1971 38972236

## 2 Albania 5.2 13192 2866850

## 3 Algeria 5.12 10735 43451668

## 4 American Samoa NA NA 46216

## 5 Andorra NA NA 77723

## 6 Angola NA 6110 33428490## vars n mean sd median trimmed mad

## Country* 1 249 125.00 72.02 125.00 125.00 91.92

## Happiness 2 153 5.49 1.12 5.53 5.52 1.16

## GDPpc 3 197 20463.88 20717.34 12655.00 17037.01 13338.95

## Pop 4 242 59178643.60 331869505.09 5596196.00 12318073.38 8185922.38

## min max range skew kurtosis se

## Country* 1.0 2.490000e+02 2.480000e+02 0.00 -1.21 4.56

## Happiness 2.4 7.820000e+00 5.420000e+00 -0.26 -0.38 0.09

## GDPpc 731.0 1.125570e+05 1.118260e+05 1.58 2.55 1476.05

## Pop 809.0 4.663087e+09 4.663086e+09 11.65 152.44 21333379.77If we run the same analysis as in Chapter 2, we’ll get the same results: Correlation R = 0.75

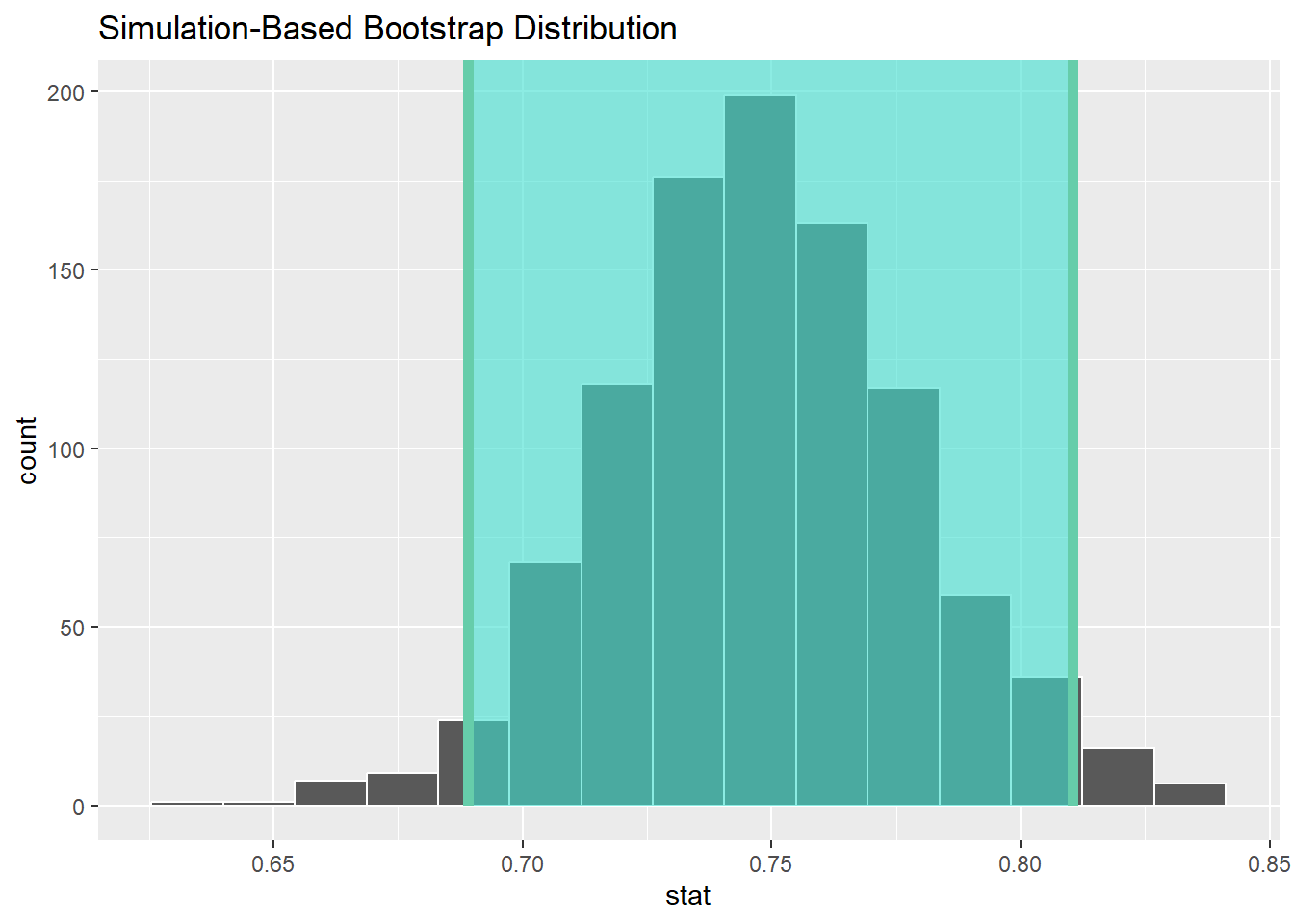

Now, let’s take uncertainty into account, by bootstrapping that correlation and creating some confidence intervals.

## # A tibble: 1 × 2

## lower_ci upper_ci

## <dbl> <dbl>

## 1 0.689 0.811## Response: Happiness (numeric)

## Explanatory: GDPpc (numeric)

## # A tibble: 1 × 1

## stat

## <dbl>

## 1 0.745So, you can see the correlation is 0.75 with a 95% confidence interval of 0.69 - 0.81

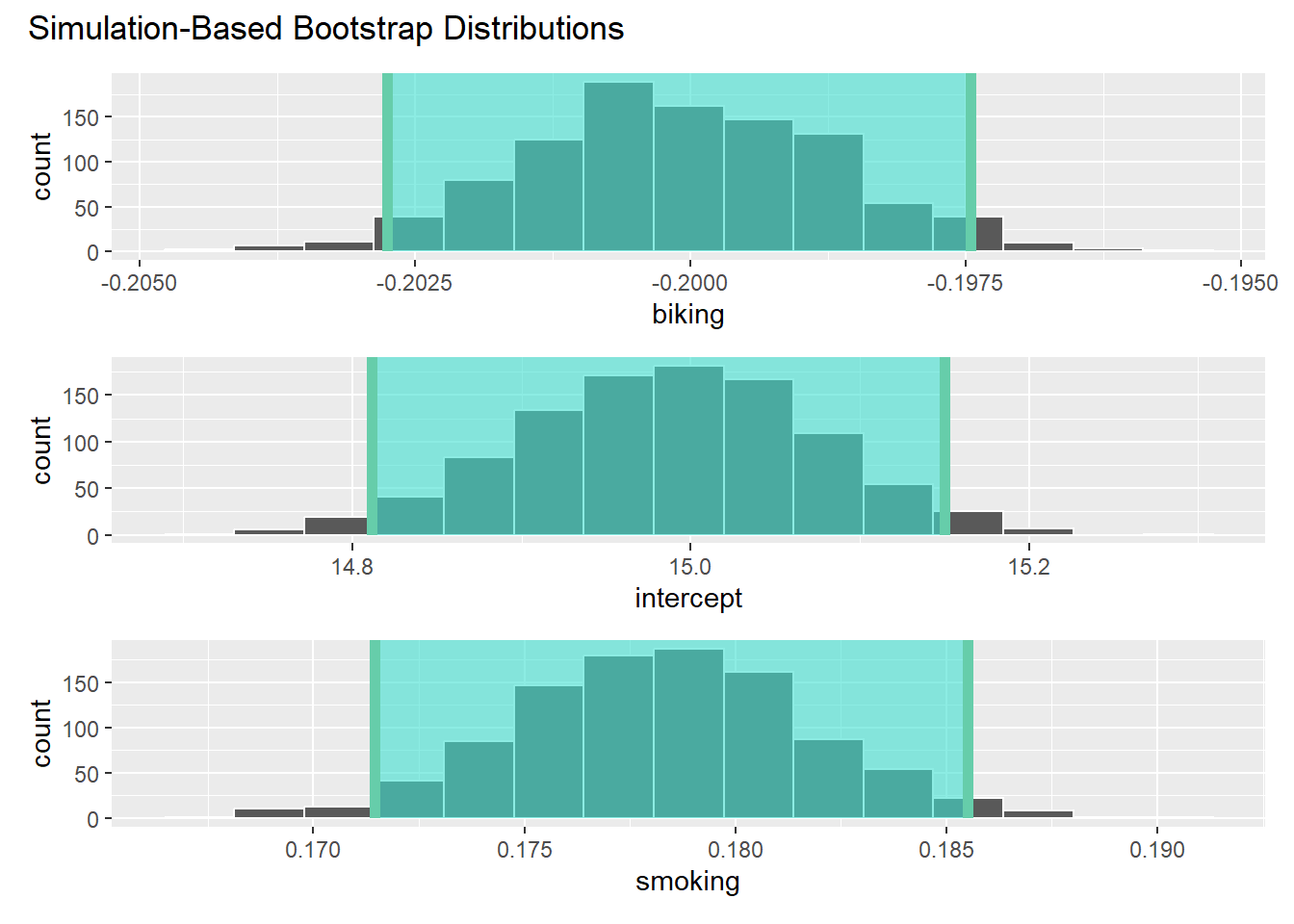

Now, let’s extend this to the multiple regression case we have previously used, examining the relationships between smoking, biking, and heart disease.

## # A tibble: 6 × 4

## ...1 biking smoking heart.disease

## <dbl> <dbl> <dbl> <dbl>

## 1 1 30.8 10.9 11.8

## 2 2 65.1 2.22 2.85

## 3 3 1.96 17.6 17.2

## 4 4 44.8 2.80 6.82

## 5 5 69.4 16.0 4.06

## 6 6 54.4 29.3 9.55## vars n mean sd median trimmed mad min max range

## ...1 1 498 249.50 143.90 249.50 249.50 184.58 1.00 498.00 497.00

## biking 2 498 37.79 21.48 35.82 37.71 27.51 1.12 74.91 73.79

## smoking 3 498 15.44 8.29 15.81 15.47 10.86 0.53 29.95 29.42

## heart.disease 4 498 10.17 4.57 10.39 10.18 5.42 0.55 20.45 19.90

## skew kurtosis se

## ...1 0.00 -1.21 6.45

## biking 0.07 -1.22 0.96

## smoking -0.04 -1.12 0.37

## heart.disease -0.03 -0.93 0.20Here, we need to calculate multiple confidence intervals as we have multiple estimates.

## # A tibble: 3 × 2

## term estimate

## <chr> <dbl>

## 1 intercept 15.0

## 2 smoking 0.178

## 3 biking -0.200## # A tibble: 3 × 3

## term lower_ci upper_ci

## <chr> <dbl> <dbl>

## 1 biking -0.203 -0.197

## 2 intercept 14.8 15.1

## 3 smoking 0.171 0.186It’s worth reflecting on exactly what these conflidence intervals mean, and to do so, we can move back to the slides…